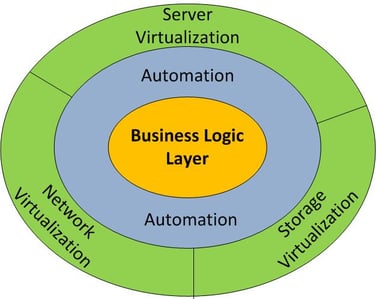

In part 1 of this series of four posts, we examined the grand vision of the software-defined datacenter (SDD). In this second post of the series, we will take a look at the core components of the SDD (see Figure 1) and provide a brief evaluation of how mature these components currently are.

Figure 1: Core Components of the Software-Defined Datacenter

a) Network Virtualization

Creating the required network is a common reason why new application environments take so long to provision. While it typically takes minutes to provision even a very large number of virtual machines, the requested networking resources often have to be created and configured at least semi-manually from multiple management interfaces. Not only does this process require advanced networking skills, but it can also lead to provisioning errors that cause security issues. Software-defined networking (SDN) allows the user to simply specify which servers have to be connected and what the relevant SLAs are. The software then figures out the most efficient way of fulfilling these requirements without the typical configuration-intensive process.
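To make the declarative idea concrete, here is a minimal sketch in Python. It is not any vendor's API: the `Link` and `ConnectivityRequest` types, the `plan_path` function, and the server and switch names are all hypothetical. The user states only the two endpoints and the SLA, and the software picks the lowest-latency path whose links all meet the bandwidth requirement.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """A bidirectional link in a toy topology (hypothetical model)."""
    a: str
    b: str
    latency_us: int        # one-way latency in microseconds
    bandwidth_gbps: int


@dataclass(frozen=True)
class ConnectivityRequest:
    """What the user declares: endpoints plus the SLA, nothing else."""
    src: str
    dst: str
    min_bandwidth_gbps: int
    max_latency_us: int


def plan_path(links, request):
    """Return (path, latency_us) for the lowest-latency path that meets
    the SLA, or None if no such path exists."""
    # Keep only links that satisfy the bandwidth part of the SLA.
    graph = {}
    for link in links:
        if link.bandwidth_gbps >= request.min_bandwidth_gbps:
            graph.setdefault(link.a, []).append((link.b, link.latency_us))
            graph.setdefault(link.b, []).append((link.a, link.latency_us))

    # Dijkstra's algorithm over the remaining links, minimizing latency.
    queue = [(0, request.src, [request.src])]
    visited = set()
    while queue:
        latency, node, path = heapq.heappop(queue)
        if node == request.dst:
            # The first time we reach dst is via the lowest-latency path.
            return (path, latency) if latency <= request.max_latency_us else None
        if node in visited:
            continue
        visited.add(node)
        for neighbor, cost in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (latency + cost, neighbor, path + [neighbor]))
    return None


# Toy topology: the direct switch-a/db-01 link is fast but only 1 Gbps.
links = [
    Link("web-01", "switch-a", 200, 10),
    Link("switch-a", "db-01", 200, 1),
    Link("switch-a", "switch-b", 300, 10),
    Link("switch-b", "db-01", 300, 10),
]
request = ConnectivityRequest("web-01", "db-01", min_bandwidth_gbps=5, max_latency_us=1000)
print(plan_path(links, request))
```

In this toy run the planner avoids the 1 Gbps shortcut and routes through `switch-b` instead. A real SDN controller would of course also have to program the devices along the path, which this sketch leaves out.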

Take a look at the following vendors: Cisco Systems, Nicira (now part of VMware), Big Switch Networks, Lyatiss, and Xsigo Systems.

VMware’s acquisition of SDN company Nicira and Oracle’s purchase of Xsigo, as well as significant initial rounds of funding for multiple network virtualization startups such as Big Switch, demonstrate that we are at the dawn of the age of network virtualization. We could compare today’s state of network virtualization with the status of server virtualization a little more than a decade ago. However, we can expect market leaders such as Cisco Systems to adopt the concepts and protocols of network virtualization, such as OpenFlow, and therefore to remain more relevant than server hardware vendors have over the past decade. Cisco’s significant investment in its own SDN startup, Insieme, is a great indicator that the company does not intend to allow its networking products to be commoditized without a fight.

VMware and Cisco or VMware vs. Cisco?

Many would regard VMware’s Nicira acquisition as an act of hostility toward Cisco Systems. VMware is basically spending $1.26 billion on acquiring a startup that aims to shave the margins off Cisco’s bread-and-butter business. Of course, the Nicira acquisition is a long-term investment aimed at eroding Cisco’s business only gradually; however, Steve Herrod’s quote definitely applies: “If you’re a company building very specialized hardware … you’re probably not going to love this message.”

At the same time, Cisco is doing the right thing, expanding its leadership position by rapidly increasing its own SDN capabilities. The Open Network Environment constitutes Cisco’s initiative, based on the Nexus 1000V switch, to make its networks open, programmable, and application-aware.

b) Server Virtualization

Pioneered by VMware over a decade ago, server virtualization is the most mature of the three virtualization components of the SDD. Today, we see a trend toward organizations adopting multi-hypervisor strategies, in order not to depend on any one virtualization vendor and to take advantage of the different cost and workload characteristics of the various hypervisor platforms. Configuration management, operating system image lifecycle management, application performance management, and resource decommissioning are currently the most significant server virtualization challenges. Many enterprise vendors, such as HP, CA Technologies, IBM, BMC, and VMware, currently offer solutions addressing these challenges.

c) Storage Virtualization

As with network provisioning, storage provisioning has traditionally been a significant obstacle for many IT projects. This is due to the often complex provisioning process, which involves many manual communication steps among the application owner, the systems administrator, and the storage team. The latter has to ensure storage capacity, availability, performance, and disaster recovery capabilities. Often storage is overprovisioned to “be on the safe side.” Overprovisioning storage is expensive, as most organizations pay a significant premium to purchase a brand-name SAN. Today, buyers of SAN storage typically show significant brand loyalty, and there is very little abstraction of storage hardware from management and features. As with networking, vendors that bundle hardware and software are able to achieve significantly higher markups than when hardware and management software are unbundled.

Take a look at the following vendors: Virsto, Nexenta, iWave, and DataCore Software.

The concept of the “storage hypervisor” is currently gaining more and more traction. Similar to SDN and server virtualization, the storage hypervisor enables customers to purchase any brand of hardware and manage it via a centralized software solution. Abstracting the management software from the SAN allows customers to manage multiple storage types and brands from a single software interface. Advanced features, such as high-performance snapshotting, high availability across multiple geographical locations, data stream de-duplication, and caching, are unbundled from the actual storage hardware, allowing customers to add storage arrays of any brand whenever needed.
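The abstraction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `StorageArray`, `InMemoryArray`, and `StoragePool` are hypothetical names, the in-memory class stands in for a real vendor driver, and the placement rule (pick the array with the most free space) is an arbitrary choice made for the example.

```python
from abc import ABC, abstractmethod


class StorageArray(ABC):
    """Minimal interface that each vendor-specific driver would implement
    (hypothetical; real arrays expose far richer APIs)."""

    @abstractmethod
    def free_gb(self) -> int: ...

    @abstractmethod
    def allocate(self, size_gb: int) -> str: ...


class InMemoryArray(StorageArray):
    """Stand-in for a real vendor driver; it only tracks capacity."""

    def __init__(self, name: str, capacity_gb: int):
        self.name = name
        self._free = capacity_gb
        self._volumes = 0

    def free_gb(self) -> int:
        return self._free

    def allocate(self, size_gb: int) -> str:
        if size_gb > self._free:
            raise ValueError(f"{self.name} has only {self._free} GB free")
        self._free -= size_gb
        self._volumes += 1
        return f"{self.name}/vol-{self._volumes}"


class StoragePool:
    """The 'storage hypervisor' role: one interface over many arrays."""

    def __init__(self, arrays):
        self._arrays = list(arrays)

    def add_array(self, array: StorageArray) -> None:
        # Capacity of any brand can be added without changing callers.
        self._arrays.append(array)

    def provision(self, size_gb: int) -> str:
        # Illustrative placement policy: use the array with the most free space.
        candidates = [a for a in self._arrays if a.free_gb() >= size_gb]
        if not candidates:
            raise RuntimeError("no array has enough free capacity")
        target = max(candidates, key=lambda a: a.free_gb())
        return target.allocate(size_gb)


pool = StoragePool([InMemoryArray("vendor-a", 100), InMemoryArray("vendor-b", 500)])
print(pool.provision(50))
```

The caller asks the pool for capacity and never addresses a specific array, which is the point of unbundling management from hardware.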

The EMC – VMware Dilemma

EMC’s ownership of VMware can be seen as a strong inhibitor when it comes to advancing storage virtualization, as EMC is certainly not interested in having its hardware margins taken away. Therefore, VMware has little incentive to contribute to the advancement of the hardware-independent storage hypervisor. However, we are now at a point where the company must face reality and acknowledge that storage hardware commoditization is inevitable. The significant investments of venture capital firms in storage virtualization companies (Virsto, Nexenta, Nutanix, NexGen Storage, PistonCloud, and many more) demonstrate the inevitability of this development.

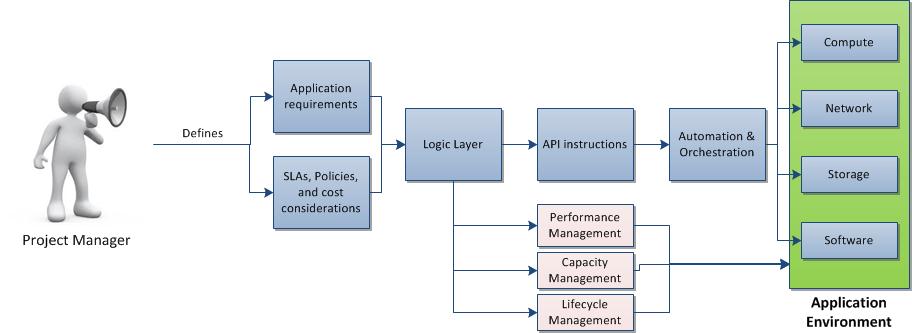

d) Business Logic Layer

As a reminder from last week’s post on the basics of the SDD, a business logic layer is required to translate application requirements, SLAs, policies, and cost considerations into provisioning and management instructions that are passed on to the automation and orchestration solution. This business logic layer is a key requirement for the SDD, as it ensures scalability and compatibility with future enterprise applications, so that customers do not have to manually create new automation workflows for each existing or new application. The business logic layer is the “secret sauce” that is essential for tying together network virtualization, server virtualization, and storage virtualization into the SDD.

Without the ability to automatically translate application requirements into API instructions for datacenter automation and orchestration software, there will be too many manual management and maintenance tasks involved to dynamically and efficiently place workloads within the programmable network, server, and storage infrastructure.
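As a rough illustration of what such a translation could look like, the following Python sketch maps business-level requirements to an ordered list of instructions for an orchestrator. Everything in it is an assumption made for the example: the `AppRequirements` fields, the three policies and their thresholds, and the instruction format are hypothetical and do not correspond to any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppRequirements:
    """Business-level description of an application (hypothetical fields)."""
    name: str
    instances: int
    availability_pct: float    # SLA target, e.g. 99.9
    data_sensitivity: str      # "public" or "confidential"
    monthly_budget_usd: int


def translate(req: AppRequirements) -> list:
    """Turn requirements into ordered instructions for an orchestrator."""
    # Policy 1 (assumed): an SLA of 99.9% or higher requires a second site.
    sites = 2 if req.availability_pct >= 99.9 else 1
    # Policy 2 (assumed): confidential data gets an isolated network segment.
    isolated = req.data_sensitivity == "confidential"
    # Policy 3 (assumed): the budget decides the storage tier.
    storage_tier = "ssd" if req.monthly_budget_usd >= 5000 else "sata"

    # Network first, then storage, then servers that attach to both.
    return [
        {"action": "create_network", "app": req.name, "isolated": isolated},
        {"action": "create_volumes", "app": req.name,
         "tier": storage_tier, "replicas": sites},
        {"action": "create_servers", "app": req.name,
         "count": req.instances, "sites": sites},
    ]


billing = AppRequirements("billing", instances=4, availability_pct=99.95,
                          data_sensitivity="confidential", monthly_budget_usd=8000)
for step in translate(billing):
    print(step)
```

The application owner only fills in `AppRequirements`. The policies live in one place, so a new application does not need its own hand-built workflow.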

Translating Business Logic into Automation Instructions

Currently, IT process automation vendors are working hard on making their products more accessible to the business. Easier-to-use workflow designers and flexible connectors for myriad current enterprise applications are a step in the right direction. However, to truly enable the SDD, IT process automation will have to progress significantly over the next few years.

The journey to the SDD will be a long one. At its end point, enterprise IT will have become truly business-focused, automatically placing application workloads where they can best be processed. We anticipate that it will take about a decade until the SDD becomes a reality. However, each step of the journey will lead to efficiency gains and make the IT organization more and more service-oriented.

In next week’s post, I will discuss some of the current controversies and challenges around the SDD, such as blades vs. “pizza boxes,” intelligent hardware vs. commodity hardware, and open architecture vs. vendor-specific stacks.