It’s always like that in enterprise IT. There’s this incredible new technology that lets you do things you never thought possible. But then, once the honeymoon is over, the old problems come back to bite you with a vengeance.

Data Protection Reloaded – This Time it’s Non-Trivial

Now let’s translate this scenario to our favorite topic today: cloud. EMA research has shown that, now that developers can rapidly provision large numbers of virtual machines in very little time, seemingly trivial and old-fashioned topics such as “data protection” take center stage again. In the days of physical servers, traditional agent-based backup tools did just fine: when new server hardware was purchased, the backup agents were installed. But once developers started provisioning their own VMs, for example through the vCloud API, IT operations found itself in a constant race to detect these new machines and apply the appropriate protection policy. Even temporary VMs need to be protected, as significant time and effort can be lost when a development environment becomes unavailable. And we all know how often even production environments fly under the backup radar until disaster strikes.
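The “race to detect new machines” boils down to a reconciliation job: compare what exists with what is protected, then assign a policy to the gap. Here is a minimal sketch of that idea. It makes no calls to any real vCloud or backup product API; the `VM` record, the environment tags and the policy names are all hypothetical and only stand in for whatever your inventory and backup catalog actually expose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    name: str
    environment: str  # hypothetical tag such as "prod" or "dev"


# Hypothetical mapping from environment tag to a protection policy name.
DEFAULT_POLICIES = {
    "prod": "daily-full-30d",
    "dev": "daily-incremental-7d",
}
FALLBACK_POLICY = "daily-incremental-7d"


def find_unprotected(inventory, protected_names):
    """Return VMs that exist in the inventory but not in the backup catalog."""
    return [vm for vm in inventory if vm.name not in protected_names]


def assign_policies(unprotected, policies=DEFAULT_POLICIES):
    """Pick a policy per VM; unknown environments get the fallback policy."""
    return {
        vm.name: policies.get(vm.environment, FALLBACK_POLICY)
        for vm in unprotected
    }


# Example data: one VM is already protected, two were self-provisioned.
inventory = [VM("web-01", "prod"), VM("build-17", "dev"), VM("scratch-03", "test")]
protected = {"web-01"}

gaps = find_unprotected(inventory, protected)
plan = assign_policies(gaps)
print(plan)
```

Run on a schedule, a job like this narrows the window in which a freshly provisioned VM sits unprotected. Note the fallback: even a VM with an unknown tag gets some policy rather than none.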

Enter VMware vSphere Data Protection

VMware offers vSphere Data Protection (VDP), which is based on EMC Avamar, free of charge for small vSphere environments. The “advanced” version of VDP lifts the limit of 100 VMs, but still offers merely basic backup and restore capabilities that most midsized to large enterprises will not find sufficient. Therefore, VMware gives its customers the freedom to pick their own data protection tool and simply connect it to the vStorage APIs for Data Protection (VADP). VADP enables agentless backup and restore at the file, volume or VM level without significant performance impact. VADP supports full, differential and incremental backup and restore of virtual machines.
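The difference between full, differential and incremental backups matters most at restore time, because it determines how many backup sets you have to replay. The sketch below is not VADP code. It only illustrates the common definitions, assuming a differential holds all changes since the last full backup and an incremental holds the changes since the most recent backup of any kind. The function name `restore_chain` is made up for this example.

```python
def restore_chain(kinds, target):
    """Return the indices of the backups needed to restore the state at `target`.

    `kinds` is a chronological list of "full", "differential" or "incremental".
    Assumed semantics (products may differ):
      - differential: all changes since the most recent full backup
      - incremental:  changes since the most recent backup of any kind
    """
    chain = []
    i = target
    while i >= 0:
        chain.append(i)
        kind = kinds[i]
        if kind == "full":
            return chain[::-1]  # replay oldest first
        if kind == "differential":
            # A differential depends only on the last full backup before it.
            i -= 1
            while i >= 0 and kinds[i] != "full":
                i -= 1
        elif kind == "incremental":
            # An incremental depends on whatever backup came right before it.
            i -= 1
        else:
            raise ValueError(f"unknown backup kind: {kind!r}")
    raise ValueError("no full backup precedes the target")


schedule = ["full", "incremental", "incremental", "differential", "incremental"]
print(restore_chain(schedule, 2))  # full plus both incrementals
print(restore_chain(schedule, 4))  # full, the differential, then one incremental
```

In this schedule the differential on day four shortens the chain: restoring the last backup needs three sets instead of five.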

Now We Need to Finish the Job: Resiliency 2.0

A significant advantage of using an established data protection tool together with VADP is that these traditional tools can protect the data center’s physical infrastructure at the same time. This means that you can centrally control all of your backups across the entire data center. For example, existing customers of IBM’s Tivoli Storage Manager can now take advantage of the tool’s edition for virtual environments (TSM VE), which offers a set of virtualization- and cloud-specific capabilities, such as changed block tracking, compression, deduplication and progressive incremental techniques to minimize backup data.
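To see why block-level techniques shrink backup data so effectively, consider this toy sketch of deduplication. It is not how TSM VE or vSphere implement these features: real changed block tracking is maintained by the hypervisor, whereas this example simply hashes fixed-size blocks and stores each unique block once. The block size and function names are assumptions chosen for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # unrealistically small, so the example data stays readable


def split_blocks(data, size=BLOCK_SIZE):
    """Cut a byte string into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def backup(data, store):
    """Write only blocks the store has not seen before.

    Returns the "recipe" (ordered block digests needed for a restore)
    and the number of blocks that actually had to be written.
    """
    recipe = []
    written = 0
    for block in split_blocks(data):
        digest = hashlib.sha256(block).hexdigest()
        if digest not in store:
            store[digest] = block
            written += 1
        recipe.append(digest)
    return recipe, written


def restore(recipe, store):
    """Rebuild the original bytes from a recipe."""
    return b"".join(store[digest] for digest in recipe)


store = {}
version_1 = b"AAAABBBBCCCCAAAA"  # first and last block are identical
version_2 = b"AAAAXXXXCCCCAAAA"  # only the second block changed

recipe_1, new_1 = backup(version_1, store)
recipe_2, new_2 = backup(version_2, store)
print(new_1, new_2, len(store))
```

The second backup writes a single block, yet both versions remain fully restorable from the shared store.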

Why Not Take this a Little Further: Resiliency 3.0, powered by Software Defined Storage

Advanced data protection capabilities (high availability, snapshots, replicas, clones, VMware stretched clusters, CDP) today are still dependent upon your SAN. OK, now I will go off on a little rant. Please skip it if you like, but I promise there is some good research data in there:

“Storage features and capabilities are still dependent upon the brand and type of storage employed, each coming with its own proprietary software. While all major storage vendors offer ‘storage virtualization’, these storage hypervisors can typically pool and provision only the vendor’s own proprietary storage hardware. At the end of the day, with each SAN purchase the customer pays again for features that are enabled by the software that is bundled with the SAN hardware, such as snapshots, clones, thin provisioning, storage tiering, high availability, deduplication, compression and so on.

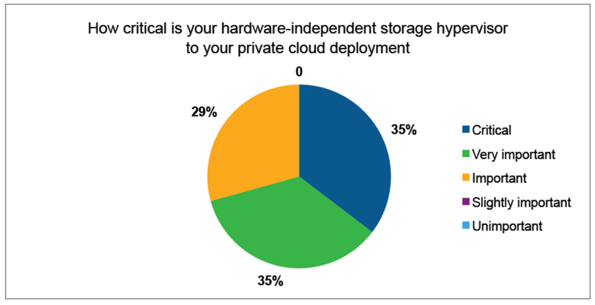

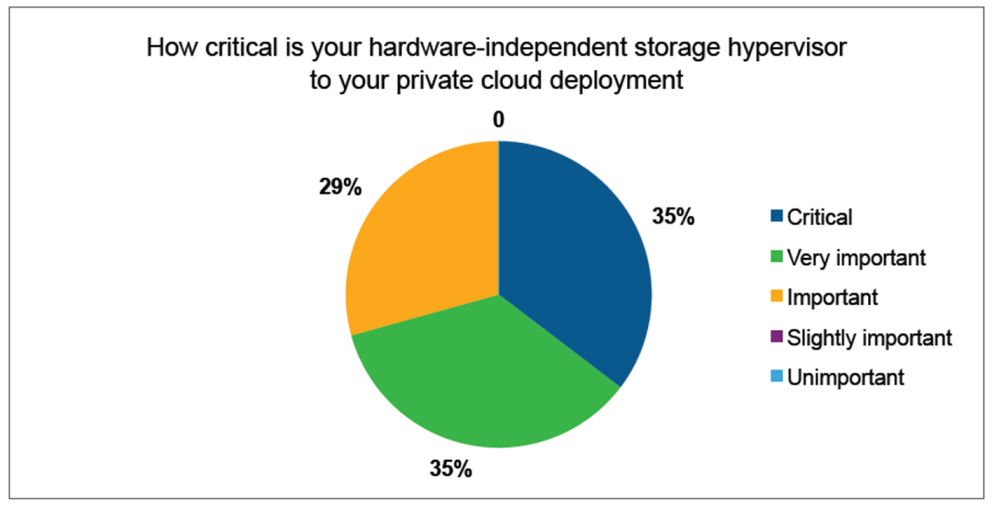

The best way to illustrate what’s going on in storage today is to draw the comparison to VMware or Microsoft telling you on which server hardware to run their virtualization stack. Or why not go further and have VMware sell rack servers and blades that work best with their own hypervisor? Of course this makes no sense, but that is exactly what is still going on in storage. Finally, customers today are growing tired of this storage lock-in and they are starting to look outside the box for truly hardware-independent, software-defined storage that is able to tie together existing storage of any type, age or brand. Here’s some unambiguous research data to back up my claim: 100% of organizations that have a hardware-independent storage hypervisor in place find this tool critical (29%), very important (35%) or important (35%) to private cloud success. Not a single study respondent selected ‘slightly important’ or ‘unimportant’, which is a remarkable result that is rare in its clarity.”

DataCore Software’s SANsymphony and IBM’s SmartCloud Virtual Storage Center are examples of hardware-independent storage virtualization solutions. Both tools virtualize and pool storage arrays of any brand and enable automated storage volume provisioning, as well as advanced storage features such as auto-tiering, high availability, CDP and snapshots. IBM’s SmartCloud Virtual Storage Center also offers a cloud portal for self-service storage provisioning. Both the IBM and the DataCore storage virtualization solutions establish feature parity between storage hardware types and brands through one central management console. This feature parity paired with hardware independence embodies the true spirit of storage virtualization. If a vendor tells you that you should buy a specific storage array to “best” take advantage of its storage virtualization solution, you should run, and run fast.

Data Protection in the Age of Cloud: Coping with the Sharknado™

The more complex the IT environment, the more important a centrally managed platform for data protection and application availability becomes. In other words, we need one central backup tool for physical, virtual and cloud environments, and we have to finally rid the IT organization of the “tyranny” of the SAN. Once we have truly abstracted storage software from the underlying commodity hardware, we can ensure application and data availability across the entire data center with its physical, virtual and cloud resources, no matter the storage back end.