This year’s DevOps Enterprise Summit (DOES) in San Francisco was carried by the energy of 1,500 practitioners who were genuinely excited about how DevOps can transform their enterprises into ‘digital attackers.’ Digital attackers rely on DevOps as their innovation engine to rapidly release high-quality software that delivers measurable business value. In short, digital attackers bully their competition by offering best-in-class customer value on a continuous basis and in a cost-effective manner.

DevOps Enterprise Summit Top 7 - Dojos, Pizzas, Cattle, Batch Jobs and More

1 - DevOps Is a Lifestyle - Dojos Replace the DevOps Team

Concentrating DevOps expertise in a dedicated team, in which selected developers and operators coordinate the software release and management lifecycle, has not worked out for most enterprises, because DevOps is not a project but a ‘lifestyle.’ Unless the entire organization ‘lives and breathes’ the key DevOps principles of communication, collaboration, automation, and joint responsibility, DevOps will constantly be held back by boundaries, conflict, and frustration.

The DevOps Dojo concept provides a corporate ‘center of excellence’ for mentoring developers, operators, and business staff enterprise-wide. The Dojo helps staff close knowledge and skill gaps, so that each individual can make better use of corporate resources and better understand the impact of their own actions. For example, when a developer decides to use a NoSQL database to save a certain number of hours when delivering a specific feature set, it is critical for that same developer to understand the impact of adding this new technology to the stack. This impact depends, for instance, on whether the enterprise has NoSQL administrators on staff and a NoSQL cluster already running. It further depends on numerous additional factors: Is the NoSQL database part of the automated release process? Does the product support team have NoSQL experience? What is the cost of additional NoSQL licenses and support? Does the new architecture make the software harder to deploy? Will the external test team require additional resources to accommodate NoSQL? What is the impact on the current audit process? And so on.

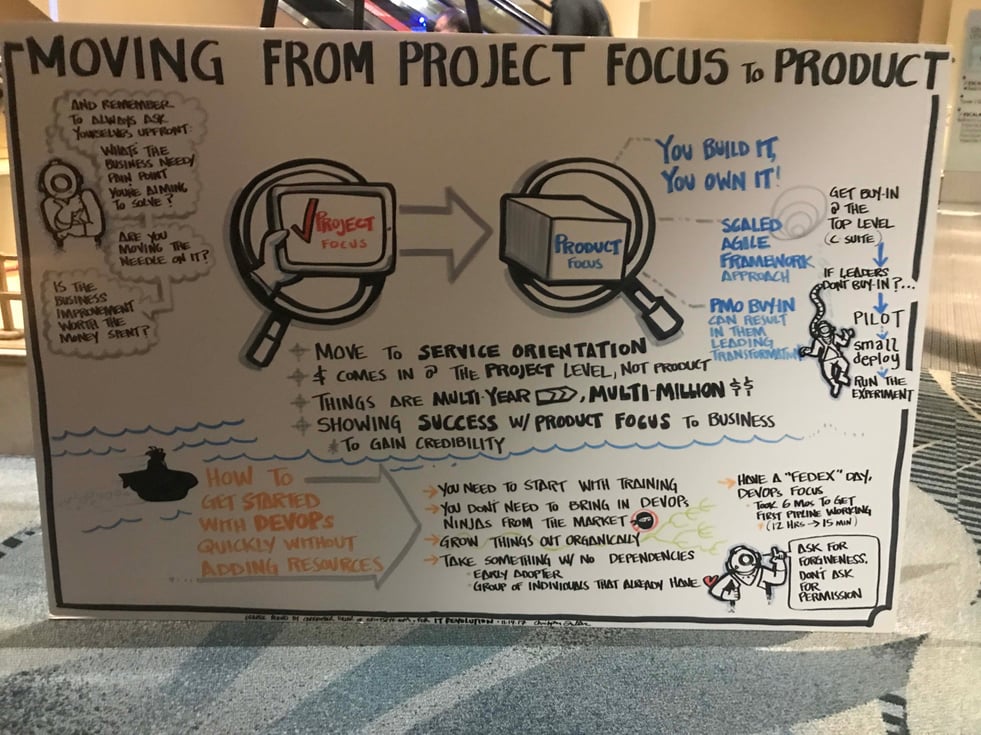

2 - Incentives Must Be Aligned with Business Outcomes - Rise of the Two Pizza Teams

A rapid release schedule requires each team member to stay alert to early warning signs of issues that can lead to disaster. Under pressure, software developers sometimes ‘forget’ about best practices for testability, deployment, upgrades, scalability, supportability, compliance, and security. By assembling ‘two pizza’ product teams of 8-12 members and holding these teams responsible for the entire software development and operations lifecycle, it no longer pays to ‘overlook’ critical issues. Furthermore, if the entire team is evaluated by the business impact of the software it releases, everyone has an incentive to work together, instead of simply ‘throwing requirements over the fence.’ One of the DOES presenters summed up this principle in the following hashtags: #YouCodeItYouBuildIt, #IfYouBuiltItYouTestIt, #YouTestedItYouDeployIt, #YouDeployedItYouOwnIt.

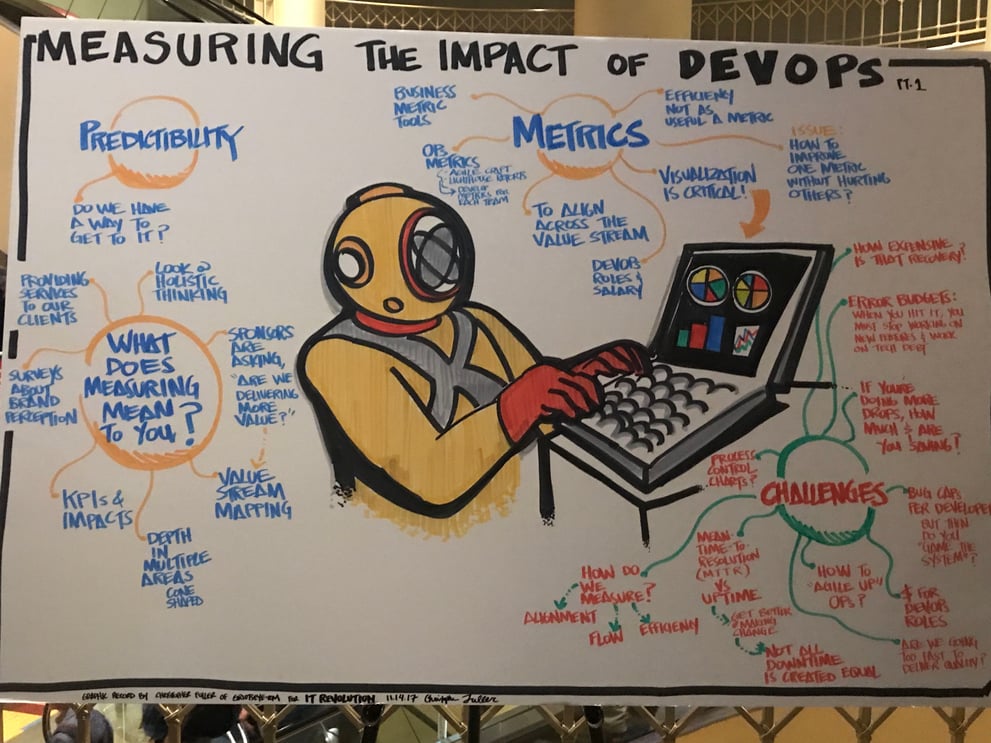

3 - We Cannot Improve What We Can’t Measure - Transparency for the DevOps Value Chain

Every organization has finite resources and therefore must focus its investments on the areas most relevant to central strategic goals, such as increasing customer satisfaction and improving operating margins. Measuring the business impact of the DevOps value chain is therefore high on the agenda of most enterprises, because it enables a rational choice about where to invest scarce resources. We need software that integrates the DevOps toolchain, so that we can trace cause and effect between code and business success throughout the software lifecycle, but we also need to encourage a culture in which transparency and openness are rewarded. When failures happen, we need sufficient data points throughout the DevOps process to determine the root cause. This ability to track down root causes is critical to building an early warning system that predicts potential problems while they can still be averted.

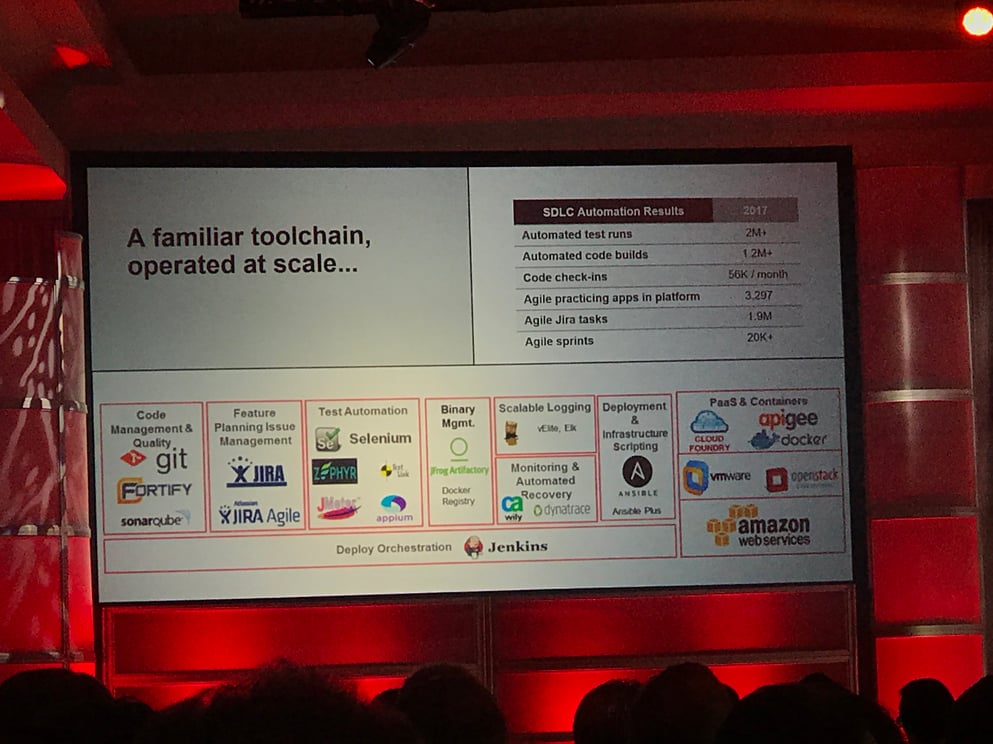

4 - Automation and Integration of the Entire DevOps Toolchain Is Key

Datacenter silos and a lack of automation come with many negative side effects in terms of speed, cost, scalability, reliability, issue detection and remediation, consistency, and compliance, to name only a few. Without centralized automation and an integrated DevOps toolchain, organizations struggle to increase their release cadence while keeping cost and risk under control.

5 - DevOps Won’t Work with Pets - It Needs Cattle

The pets versus cattle discussion may be old, but it is more relevant than ever. Every ‘special touch’ added to the deployment and configuration of a server is hugely detrimental to the key DevOps mission of rapidly creating microservices that are highly scalable and portable. Every time we create a server, or a group of servers, that is not defined via code and automatically provisioned, we also create ‘exceptions’ that can tear holes in the DevOps compliance and security framework. Audit readiness is a key topic in rapidly changing environments. Only if infrastructure resources are consistent, and therefore interchangeable, will the corporate compliance team be able to continuously pull comprehensive reports that can be passed on to the auditor, almost on demand.
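To make the consistency argument concrete, here is a minimal Python sketch of configuration drift detection, assuming each server’s settings can be collected as simple key/value pairs and compared against one declared baseline. The host names, setting keys, `BASELINE`, `FLEET`, and the `check_drift` helper are all hypothetical; real fleets would typically rely on their configuration management or policy tooling for this. Any server that deviates from the baseline, whether through a changed value or an undeclared ‘special touch,’ shows up as an exception in the report.

```python
from dataclasses import dataclass, field

# Hypothetical declared baseline: the single "cattle" definition every web server should match
BASELINE = {"os": "ubuntu-22.04", "nginx": "1.24", "ssh_root_login": "no"}

# Hypothetical observed state, e.g. as collected by an inventory agent
FLEET = {
    "web-01": {"os": "ubuntu-22.04", "nginx": "1.24", "ssh_root_login": "no"},
    "web-02": {"os": "ubuntu-22.04", "nginx": "1.22", "ssh_root_login": "no",
               "debug_tool": "installed"},  # a manual 'special touch'
}


@dataclass
class DriftReport:
    host: str
    missing: dict = field(default_factory=dict)     # declared settings absent on the host
    mismatched: dict = field(default_factory=dict)  # setting -> (declared, actual)
    undeclared: dict = field(default_factory=dict)  # settings not in the baseline at all

    @property
    def compliant(self) -> bool:
        return not (self.missing or self.mismatched or self.undeclared)


def check_drift(baseline: dict, observed: dict) -> list:
    """Compare every host's observed settings against the declared baseline."""
    reports = []
    for host, actual in sorted(observed.items()):
        report = DriftReport(host)
        for key, declared in baseline.items():
            if key not in actual:
                report.missing[key] = declared
            elif actual[key] != declared:
                report.mismatched[key] = (declared, actual[key])
        for key in sorted(actual.keys() - baseline.keys()):
            report.undeclared[key] = actual[key]
        reports.append(report)
    return reports


if __name__ == "__main__":
    for r in check_drift(BASELINE, FLEET):
        status = "compliant" if r.compliant else f"drift {r.mismatched} {r.undeclared}"
        print(r.host, status)
```

Because every host is judged against the same coded definition, the compliance report is just the list of non-compliant entries, which can be regenerated whenever the auditor asks.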

Every time we call one of our friends in the data center and ask for a ‘quick and informal’ solution to an urgent challenge, we not only divert resources from where they are needed, but also decrease transparency. Of course, when it is 4pm and you need to push through an enhancement for a VIP customer by close of business, you are understandably tempted to circumvent process, make some calls, and get things done. However, the exception must then be recorded and discussed, in order to identify the gap in automation and determine the best path to closing it.

6 - Curb Database Sprawl - Databases as First-Class Citizens

Many enterprises today complain that their databases lag behind in terms of release automation. Disconnecting the database lifecycle from the overall application lifecycle often leads to wasted resources, inefficient database operations, and performance challenges. Creating separate database instances for different applications leads to the need to support numerous versions of the same database. Someone then also has to keep track of end-of-life issues, separate security patches, and operating system compatibility for each of those versions. Therefore, database automation needs to become part of the DevOps toolchain.
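As a rough illustration of how sprawl multiplies the support burden, the following Python sketch groups a hypothetical inventory of database instances by engine and version and flags the applications running on versions marked end of life. The inventory, the `END_OF_LIFE` set, and `sprawl_report` are illustrative assumptions; an actual end-of-life list would come from each vendor’s published lifecycle policy.

```python
from collections import defaultdict

# Hypothetical inventory: (application, database engine, major version)
INSTANCES = [
    ("billing", "postgres", "12"),
    ("crm", "postgres", "15"),
    ("bookings", "postgres", "12"),
    ("analytics", "postgres", "16"),
    ("catalog", "mysql", "5.7"),
]

# Illustrative end-of-life set; real data comes from each vendor's lifecycle policy
END_OF_LIFE = {("postgres", "12"), ("mysql", "5.7")}


def sprawl_report(instances, end_of_life):
    """Count distinct versions per engine and list applications stuck on end-of-life versions."""
    apps_by_version = defaultdict(lambda: defaultdict(list))
    for app, engine, version in instances:
        apps_by_version[engine][version].append(app)

    report = {}
    for engine, by_version in apps_by_version.items():
        eol_apps = []
        for version, apps in by_version.items():
            if (engine, version) in end_of_life:
                eol_apps.extend(apps)
        report[engine] = {
            # each distinct version needs its own patching, testing, and compatibility checks
            "distinct_versions": len(by_version),
            "eol_apps": sorted(eol_apps),
        }
    return report


if __name__ == "__main__":
    for engine, summary in sprawl_report(INSTANCES, END_OF_LIFE).items():
        print(engine, summary)
```

In this made-up inventory, four PostgreSQL instances already span three major versions, which is exactly the kind of drift that treating databases as part of the release toolchain is meant to prevent.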

7 - The Corporate World Still Runs on Batch Jobs - Job Scheduling Needs to Shift Left

Modern development and product teams often ignore the fact that ‘nothing goes’ without batch jobs. Flight bookings, online purchases, and bank transactions all rely on multiple interactions with batch jobs. Many of these still run on mainframes today. These jobs update databases, fetch files from remote locations or glue together mainframe-based applications with modern microservices. When creating cloud native applications, development teams need to consider these dependencies on batch jobs when planning their releases.
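One way to picture ‘considering these dependencies’ is a pre-release check that lists which batch jobs touch the components a release changes, plus every job downstream of them, in the order they run. The sketch below assumes a hypothetical, hand-maintained inventory (`JOB_TOUCHES`, `JOB_DEPENDS_ON`) and a hypothetical helper `affected_jobs`; in practice this information would live in the enterprise’s job scheduler. It uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+) to order the affected jobs.

```python
from graphlib import TopologicalSorter

# Hypothetical inventory: which components each batch job reads from or writes to
JOB_TOUCHES = {
    "nightly_settlement": {"payments_db", "mainframe_ledger"},
    "fetch_partner_files": {"sftp_partner_drop"},
    "load_bookings": {"bookings_db", "sftp_partner_drop"},
    "refresh_reporting": {"reporting_db"},
}

# Hypothetical job dependencies: job -> jobs that must finish before it starts
JOB_DEPENDS_ON = {
    "load_bookings": {"fetch_partner_files"},
    "refresh_reporting": {"load_bookings", "nightly_settlement"},
}


def affected_jobs(changed: set) -> list:
    """Jobs touching a changed component plus all jobs downstream of them, in run order."""
    directly = {job for job, touches in JOB_TOUCHES.items() if touches & changed}

    # Reverse the dependency edges so we can walk from a job to the jobs that consume its output
    downstream = {}
    for job, deps in JOB_DEPENDS_ON.items():
        for dep in deps:
            downstream.setdefault(dep, set()).add(job)

    affected = set(directly)
    stack = list(directly)
    while stack:
        job = stack.pop()
        for nxt in downstream.get(job, ()):
            if nxt not in affected:
                affected.add(nxt)
                stack.append(nxt)

    # Keep only dependencies among affected jobs, then order them so predecessors come first
    graph = {job: JOB_DEPENDS_ON.get(job, set()) & affected for job in affected}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(affected_jobs({"sftp_partner_drop"}))
```

With this sample inventory, a release that changes the partner file drop surfaces `fetch_partner_files`, `load_bookings`, and `refresh_reporting`, so the team knows which scheduled jobs to test and coordinate with before going live.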