Why Machine Learning Diagnoses Cancer but Can’t Run our Hybrid Cloud

Why can machine learning and artificial intelligence reliably find cancer, for example, in CT scans (read the excellent very short MIT article), but they cannot tell me whether or not it makes sense to containerize an application, how to most efficiently provision storage pools in vSphere, or simply read out my very easy to read aquarium monitor display?

Here’s my Simple Answer

When a neural network learns to diagnose cancer, data scientists train it with a few thousand CT images that contain examples of cancerous and cancer free patients (outcome variable). Deep learning independently identifies the input variables (parameters) by repeatedly analysing the images, pixel by pixel, and comparing all of these pixel permutations with ‘cancer’ or ‘no cancer’. And exactly that is the challenge for enterprise IT.

Why It’s not so Easy for IT Operations

There are two components to this answer:

- Human brains don’t understand massive abstract data correlations: The just described pixel by pixel analysis isn’t a concept that the human brain can follow. The machine treats the CT image like a pixel matrix (basically a huge 2 dimensional space). Then it looks at the colors of each pixel in relationship to all other pixels. This requires brute force computing and once the result is there, the machine cannot explain the outcome to us humans, as these results are just abstract mathematical pixel correlations.

- The machine cannot easily learn from its mistakes: The way the machine learns how to identify cancer is to look at a sample of correctly classified (Cancer / no cancer) CT images and when it makes a mistake, it receives additional training, as the symptoms of cancer/no cancer will appear in the patient and fed back to the machine learning algorithm. However, when watching a VMware administrator, it is difficult for the machine to determine the direct result of her actions, as there are is a myriad of confounding variables and output variables. There’s also a time delay that makes things even more difficult.

As this is a pretty abstract explanation, here are two simple examples that show the complexity of enterprise IT versus cancer diagnostics:

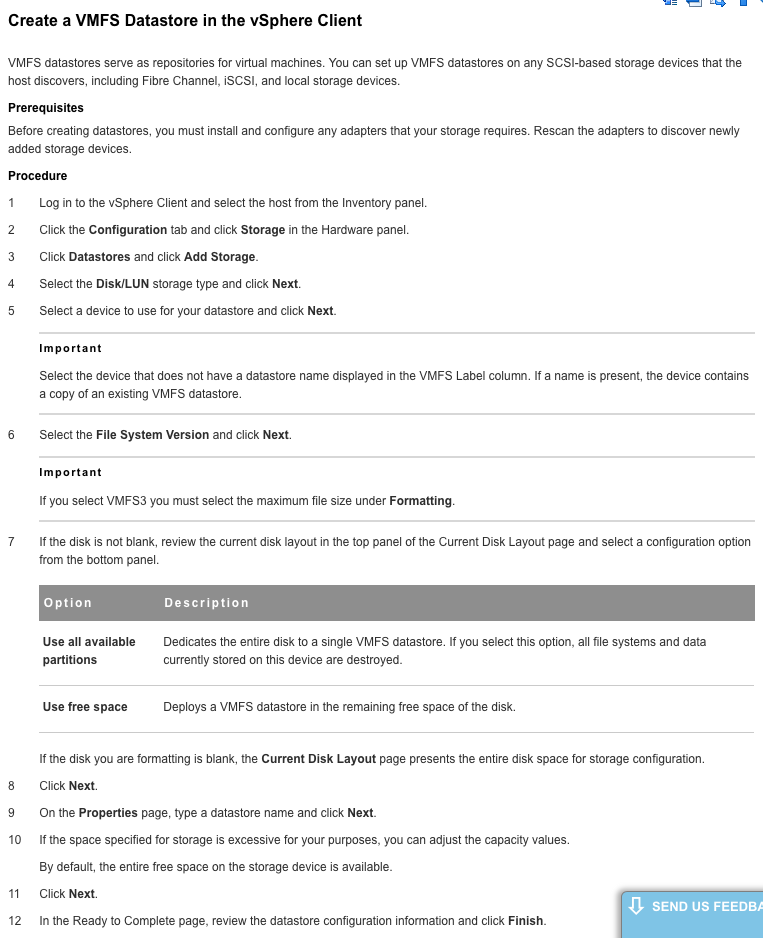

Example: The machine ‘watches’ the IT admin go through the process of creating a VMFS Datastore in the vSphere Client. Here are the steps:

You can see that most of these 12 steps seem trivial and should have absolutely nothing to do with the ultimate reliability or performance of the datastore. The tricky part for the machine is to determine the following:

- Which selections during this process are without alternative in this specific situation. E.g. they could depend on the storage hardware, the network setup, or specific performance requirements, or maybe the conscious decision of the admin to sacrifice speed for cost.

- If 2 months after this admin provisioned the storage, a seemingly unrelated app slows down, this could well be due to the choices the admin made 2 months back, but in most cases it will be entirely unrelated.

- If 6 months later the app that’s based on a VM that draws storage from this datastore performs poorly due to high latency, this could be due to the above described conscious decision of sacrifizing speed for cost or it could be due to another application running hot on the same spindle or it could be due to an hardware issue or maybe a network issue. I’m not a vSphere admin, so there are probably another dozen potential root causes and there definitely are more outcomes and more time delay options.

This is ‘coincidentally’ the starting point for our EMA Research project on machine learning and artificial intelligence in IT operations.

Excellent MIT article on this topic by Will Knight: The Dark Secret at the Heart of AI, April 11, 2017:

https://www.technologyreview.com/s/604087/the-dark-secret-at-the-heart-of-ai/

EMA Machine Learning website: https://storify.com/TorstenVolk/machine-learning-and-artificial-intelligence-optim

SaveSave